New Feature

Beyond Fraud Detection: AI-Quality Interview Screening at Scale

Jan 6, 2026

Aaron Cannon

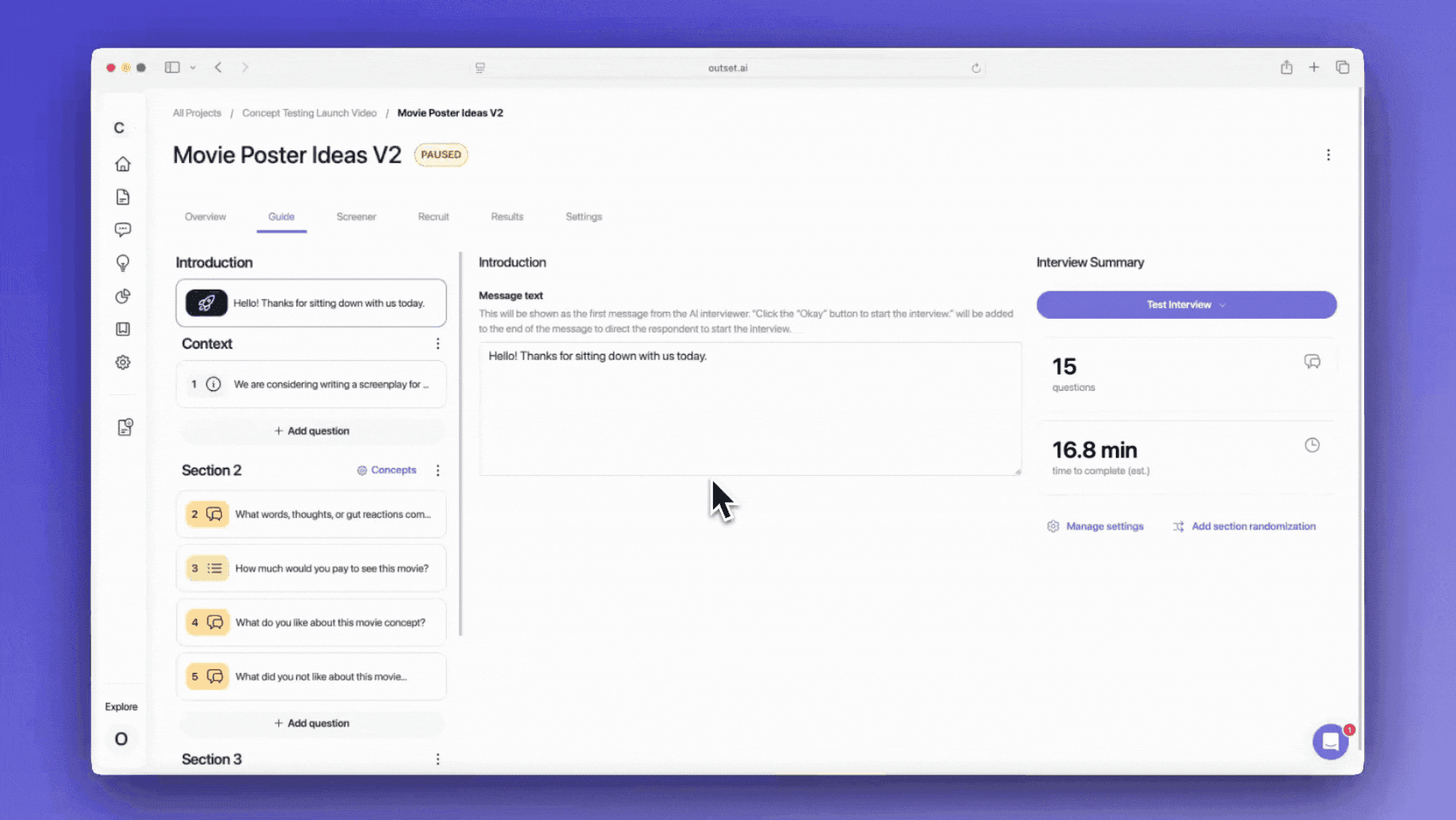

When we launched Fraud Detection, we solved a critical problem in AI-moderated interviews: removing interviews that were taken fraudulently or answered with AI. That was a big step forward for trust.

But as more teams scaled their AI-moderated research, a different issue became clear.

Not all bad interviews are fraudulent.

Some are simply… low quality.

The real problem

These interviews technically “complete.”

They aren’t fake.

But they’re rushed, shallow, or incomplete.

And researchers have historically still paid for them, and then had to remove the data manually.

That creates a frustrating reality:

Budgets are wasted on interviews that don’t move decisions forward

Teams spend time cleaning and excluding data

Stakeholders lose confidence

Fraud detection alone isn’t enough.

What we learned

Low-quality interviews usually aren’t malicious. They’re behavioral.

Participants rush. They give minimal answers. They disengage.

And crucially: once the interview is over, it’s too late to fix the problem.

Quality needs to be addressed during the interview.

Introducing AI-Quality Screening

Today, we’re launching AI-Quality Screening, an extension of Fraud Detection that ensures interviews aren’t just legitimate, but useful.

AI-Quality Screening introduces a live quality tracker that:

Monitors response depth and completeness in real time

Warns participants when answers are low effort or incomplete

Removes participants after repeated low-quality behavior

Tags every completed interview as Low, Medium, or High quality

Fraud Detection removes invalid interviews. AI-Quality Screening ensures the rest are decision-grade.
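
To make the tracker's behavior concrete, here is a minimal sketch of the four steps listed above: monitor, warn, remove, tag. It is an illustration only, not Outset's implementation. The `QualityTracker` class, the word-count proxy for response depth, and every threshold are hypothetical assumptions chosen to keep the example short.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    WARN = "warn"
    REMOVE = "remove"


@dataclass
class QualityTracker:
    # All thresholds are illustrative assumptions, not real product values.
    min_words: int = 8      # answers shorter than this count as low effort
    max_strikes: int = 3    # low-effort answers allowed before removal
    scores: list[float] = field(default_factory=list)
    strikes: int = 0

    def score(self, answer: str) -> float:
        """Crude depth proxy (assumption): word count scaled to [0, 1]."""
        words = len(answer.split())
        return min(words / (self.min_words * 3), 1.0)

    def observe(self, answer: str) -> Action:
        """Record one answer during the interview and decide what to do next."""
        self.scores.append(self.score(answer))
        if len(answer.split()) >= self.min_words:
            return Action.CONTINUE
        self.strikes += 1
        if self.strikes >= self.max_strikes:
            return Action.REMOVE   # repeated low-quality behavior
        return Action.WARN         # nudge the participant in the moment

    def tag(self) -> str:
        """Label a completed interview as Low, Medium, or High quality."""
        if not self.scores:
            return "Low"
        mean = sum(self.scores) / len(self.scores)
        if mean >= 0.75:
            return "High"
        if mean >= 0.4:
            return "Medium"
        return "Low"
```

In this sketch, calling `observe` after each answer returns `WARN` for the first two short answers and `REMOVE` on the third, while `tag` is called once at the end of a completed interview. A real system would presumably judge depth and completeness with something richer than word count.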

Why this matters

Most participants improve when they’re guided in the moment.

Those who don’t are removed before they cost you time or money.

The result:

Higher-effort interviews

Less post-hoc cleanup

Clear visibility into interview quality

Confidence that you’re paying for research that actually matters

A complete trust stack

Together, Fraud Detection and AI-Quality Screening form Outset’s Interview Trust Stack—built for teams who want to scale qualitative research without sacrificing rigor.

Because at scale, trust isn’t optional.

And neither is quality.

About the author

Aaron Cannon

CEO - Outset

Aaron is the co-founder and CEO of Outset, where he’s leading the development of the world’s first agent-led research platform powered by AI-moderated interviews. He brings over a decade of experience in product strategy and leadership from roles at Tesla, Triplebyte, and Deloitte, with a passion for building tools that bridge design, business, and user research. Aaron studied economics and entrepreneurial leadership at Tufts University and continues to mentor young innovators.

Interested in learning more? Book a personalized demo today!